
Five Research Pitfalls to Watch Out For


What differentiates a good study from a bad study?

The best new research has the potential to change the world. Good studies have the evidence to back up real scientific progress. But maybe an easier question to answer is: how does one avoid wasting a good study’s potential with poor research practices?

There are many places where good science can go astray: the research design phase, conducting experiments, running the analysis, writing, and publishing. Here are five common but critical research pitfalls, and how you can avoid them to help your research achieve its fullest potential.

Failing to fully assess existing research

This is perhaps the single hardest pitfall to avoid. That’s because there is no way to possibly ‘fully assess’ existing research. It’s simply impossible.

Think of it this way: more than seven million research papers are published every year. Even within a niche field, there’s just no way that an individual scientist can have the time to read all of the published studies!

That doesn’t mean one should simply give up. Chances are that no matter your research query, others have thought of it too and have attempted to investigate it in the past. The first mistake any scientist can make is to assume that a research question has never been studied before. Researchers should instead assume that their question has already been answered in some form. That makes the core job of the researcher to uncover the existing literature and use it in designing their research study.

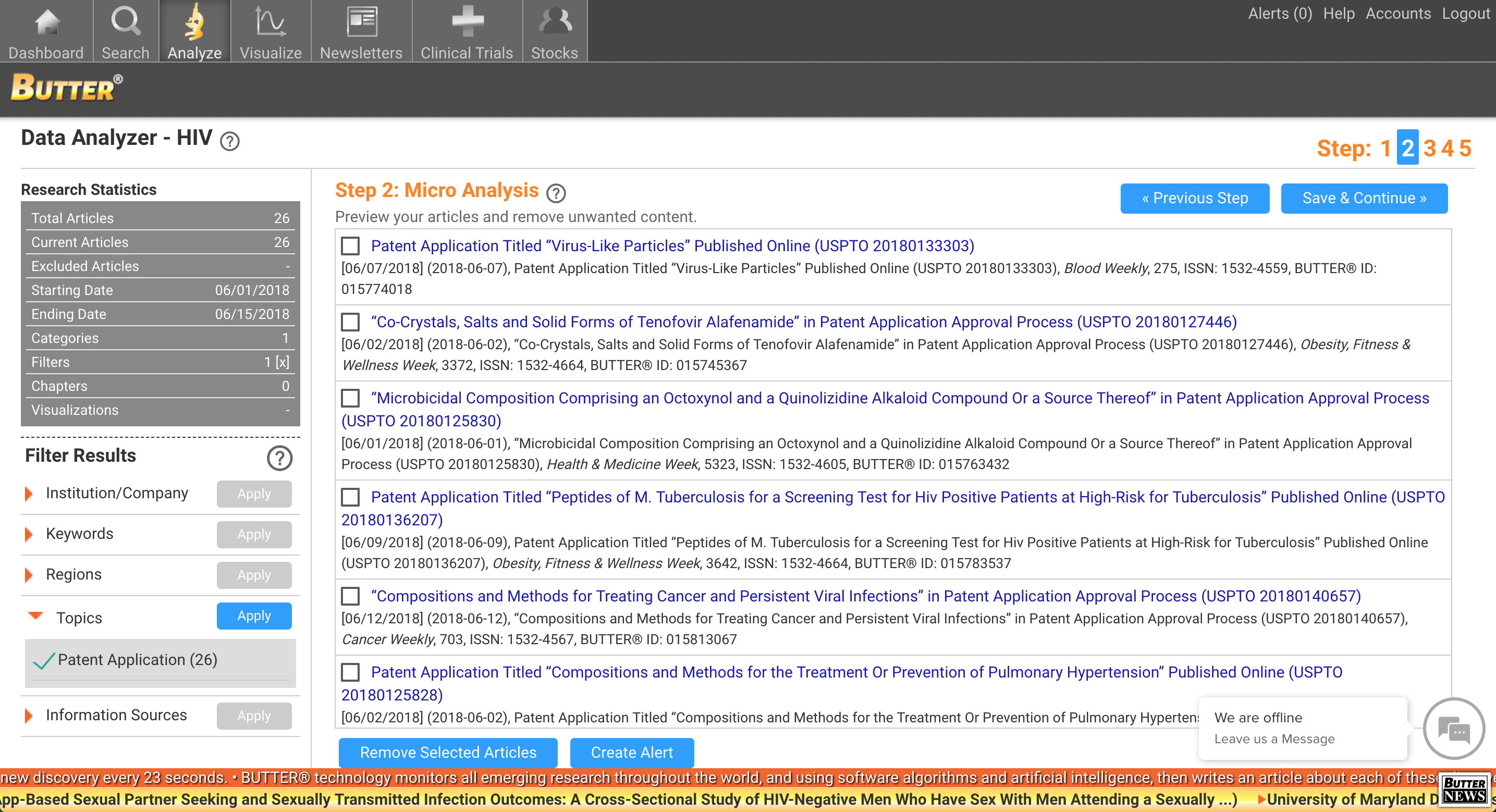

A comprehensive literature review is challenging, but it can be made much easier with the aid of technology. This could mean academic or publisher journal alerts from relevant sources like the National Academies of Sciences, Engineering, and Medicine, which provides alerts on 20-25 broad scientific topics. Academic aggregators, such as EBSCO, ProQuest, and Cengage, have access to thousands of research journals and provide alerts on publications and sometimes on topics.

A more comprehensive solution comes in the form of BUTTER, a research tool with tens of thousands of peer-reviewed and global sources. BUTTER provides alerts for authors, topics, and keywords, along with brief reports that summarize and describe each scholarly article in the database. A tool like BUTTER, which allows a user to search for, analyze, and track research, can help scientists assess the existing research as much as is realistically possible.

Incomplete preparation for the experiment

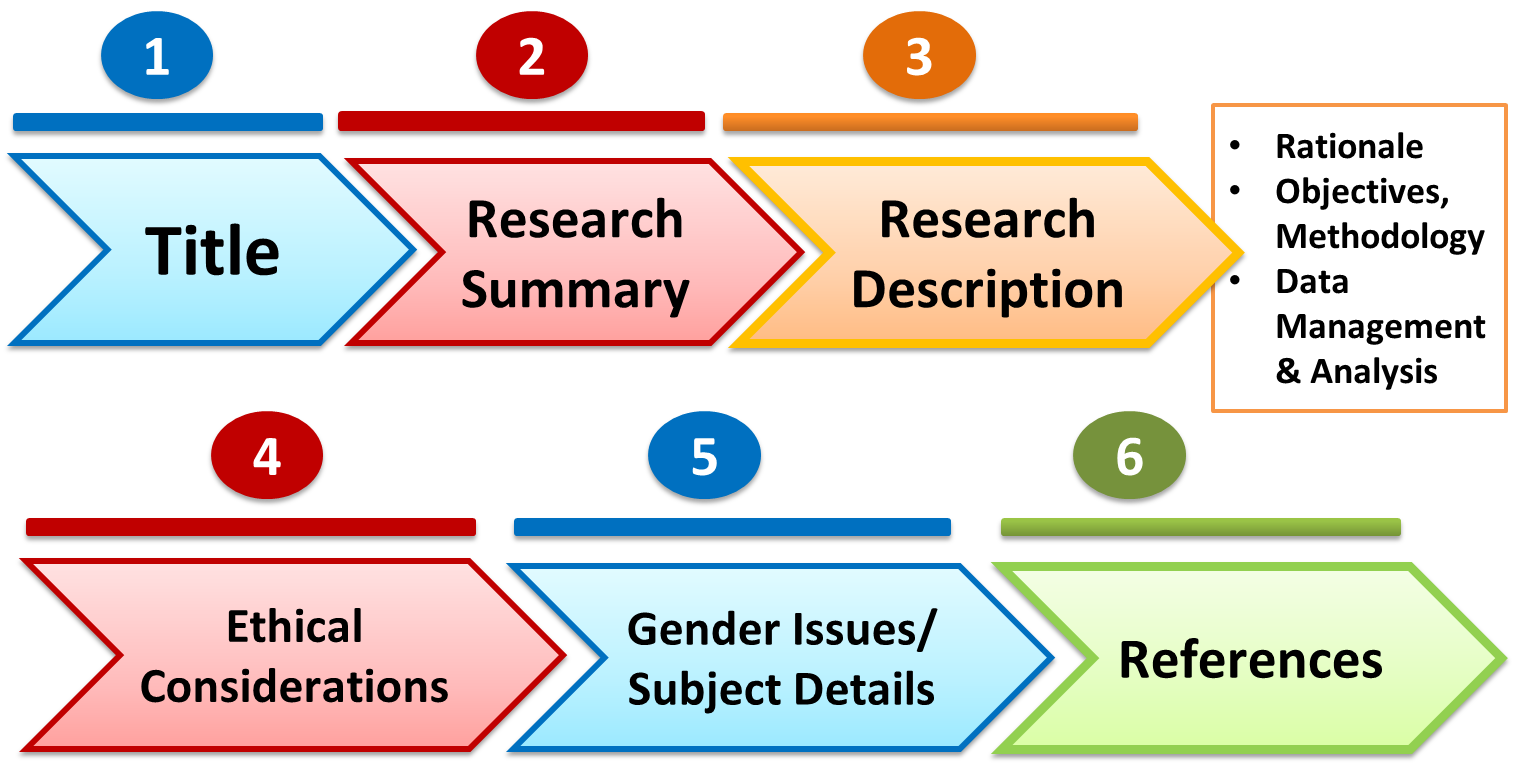

Much of the groundwork for a great study is laid before the actual experiment or data collection begins. That goes for assessing the existing research, but it also goes for study design.

A fully developed protocol for any study is critical. Researchers should also be sure to present that protocol to a peer group that can provide critical comments and suggestions for improvement. Being well-prepared involves a detailed written protocol, consulted frequently during the experiment and data collection phase.

Don’t skip any steps before the experiment phase begins. This includes performing a sample size analysis and implementing bias control measures. Not conducting these steps in advance can singlehandedly damage an otherwise good research study.

Computer programs can help a researcher figure out how many subjects are needed to create a sound study. But in order to conduct a sample size analysis, researchers also need a sound understanding of their own data, in particular the size of the effect they expect to see and how much the measurements vary. Meanwhile, measures for bias control include randomizing subjects, measuring and analyzing subjects while remaining blind to their status, having a credible control condition, and ensuring that the study subjects are unaware of which group they belong to.
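Randomizing subjects, one of the bias control measures listed above, can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: it assumes two study arms, uses simple block randomization so the groups stay the same size, and the subject IDs, arm names, and seed are all hypothetical.

```python
import random
from collections import Counter


def block_randomize(subject_ids, arms=("treatment", "control"), seed=2024):
    """Assign subjects to study arms in shuffled blocks.

    Each block contains every arm exactly once, so group sizes never
    differ by more than one block's worth of subjects.
    """
    rng = random.Random(seed)  # fixed seed: the allocation can be reproduced and audited
    assignments = {}
    block_size = len(arms)
    for start in range(0, len(subject_ids), block_size):
        block = list(arms)
        rng.shuffle(block)  # random order of arms within this block
        for subject, arm in zip(subject_ids[start:start + block_size], block):
            assignments[subject] = arm
    return assignments


# Hypothetical subject identifiers, for illustration only.
subjects = [f"S{i:03d}" for i in range(1, 13)]
allocation = block_randomize(subjects)
group_sizes = Counter(allocation.values())
print(group_sizes)
```

To support blinding, the person who runs this script and holds the allocation table would ideally not be the person who measures or analyzes the subjects.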

Laboratory procedural mistakes

Laboratory procedures exist for good reason, and they need to be followed. Small mistakes can have severe consequences for the integrity of the experiment, so constant vigilance is required. This starts with good documentation. Laboratory notebooks must contain enough detail that an experiment that works can be consistently reproduced. Researchers should record everything: all materials used, nuances of the methods applied, and anything atypical that occurs during the experiment.

A common laboratory mistake is related to reagents and equipment. Researchers should check reagent expiration dates and make sure that reagents have been stored properly (e.g. refrigerated vs. frozen). In addition, failing to maintain equipment properly can lead to false results. Scientists must perform standard maintenance, check that certifications are up to date, and never cut corners when calibrating equipment. A good example, described by Oxford University Press, is calibrating micropipettes.

Statistical analysis mistakes

Much can also go wrong when analyzing one’s data from a completed experiment. A few error-prone areas to keep track of in particular include data normality, missing data, and power calculations.

A dataset is normally distributed when its values cluster symmetrically around the mean in a bell-shaped curve. Approximately normal data are assumed by the more sensitive data analysis methods, such as parametric statistical analysis, which is why normality is considered preferable. Researchers should always check the normality of their dataset before choosing an analysis method. If the data are clearly not normal, a transformation or a nonparametric method may be more appropriate.
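One way to check normality numerically is sketched below, using the Jarque-Bera test, which measures how far a sample's skewness and kurtosis are from those of a normal distribution. This is only one of several options (Shapiro-Wilk tests and Q-Q plots are common alternatives), the test is only reliable for reasonably large samples, and the two datasets here are synthetic examples constructed for illustration.

```python
import math
from statistics import NormalDist


def jarque_bera(values):
    """Return the Jarque-Bera statistic and its asymptotic p-value."""
    n = len(values)
    mean = sum(values) / n
    # Central moments of the sample (population form, dividing by n).
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    if m2 == 0:
        raise ValueError("all values are identical; normality cannot be assessed")
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    statistic = n / 6.0 * (skewness ** 2 + excess_kurtosis ** 2 / 4.0)
    # Under normality the statistic is roughly chi-square with 2 degrees of
    # freedom, whose upper-tail probability is exp(-x / 2).
    p_value = math.exp(-statistic / 2.0)
    return statistic, p_value


# Synthetic data: evenly spaced normal quantiles (bell-shaped) and their
# exponentials (strongly right-skewed).
std_normal = NormalDist()
n = 200
bell_shaped = [std_normal.inv_cdf((i + 0.5) / n) for i in range(n)]
right_skewed = [math.exp(x) for x in bell_shaped]

for label, sample in (("bell-shaped", bell_shaped), ("right-skewed", right_skewed)):
    statistic, p_value = jarque_bera(sample)
    verdict = "no evidence against normality" if p_value >= 0.05 else "normality is doubtful"
    print(f"{label}: JB = {statistic:.2f}, p = {p_value:.3g} -> {verdict}")
```

A small p-value suggests the data depart from normality. A large one does not prove normality, so it is worth looking at a histogram as well.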

Researchers need to report all dropped data, missing data, and dropped subjects, regardless of the method used to handle them. Experts advise excluding subjects openly, and documenting why, rather than trying to keep the number of drop-outs low.

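Failing to perform a power calculation on one’s study is another easy slip-up. Power calculations are a statistical tool to help determine sample size, and there are multiple software programs that allow researchers to determine the power of their study. An underpowered study can miss a real effect, and a significant result from one is more likely to be spurious or exaggerated, so performing a power calculation can help one avoid reporting incorrect associations. Remember, too, that correlation does not equal causation: power describes whether an effect can be detected, not why it exists.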
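To make the idea concrete, here is a minimal sketch of a power calculation for comparing the means of two equally sized groups. It relies on the standard normal approximation, so dedicated software based on the t distribution will typically ask for one or two more subjects per group. The effect sizes, the 5% significance level, and the 80% power target are conventional assumptions for illustration, not recommendations.

```python
import math
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Subjects needed per group for a two-sided, two-sample comparison.

    effect_size is the standardized mean difference (Cohen's d).
    Normal approximation: n = 2 * ((z_alpha + z_power) / d) ** 2
    """
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    z_power = _Z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def achieved_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of the same comparison for a given group size."""
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    return _Z.cdf(abs(effect_size) * math.sqrt(n_per_group / 2) - z_alpha)


# Conventional "small", "medium", and "large" standardized effects.
for d in (0.2, 0.5, 0.8):
    n = sample_size_per_group(d)
    print(f"d = {d}: about {n} subjects per group "
          f"(power {achieved_power(d, n):.2f})")

# The same medium effect studied with only 20 subjects per group.
print(f"d = 0.5, n = 20: power {achieved_power(0.5, 20):.2f}")
```

The last line shows why this matters: with 20 subjects per group, a medium-sized effect would be detected well under half the time.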

Failing to assess the best avenue for publication

So you’ve completed your research study and avoided all the pitfalls thus far. Congratulations! But one last pitfall awaits in the publication process. While all researchers will be eager to get their study into the highest-profile journal they possibly can, the 2020s offer a truly diverse array of options for publication. Researchers should carefully consider all the different avenues available.


First, one should decide whether to pursue peer-reviewed publication or instead publish a preprint. Preprints get one’s research out into the world quickly and in a completely accessible format. While preprints can allow poor research to circulate, they are a useful tool for scientists to share work with the world. But it’s not a binary choice: nowadays, many researchers opt for both, putting out a preprint and then pursuing peer review. In fact, some publishers encourage authors to post a preprint before submitting for peer review.

When researchers decide to go with peer review, they must carefully consider their journal choices before submitting. Factors at play include journal reputation as measured by scores like the Journal Impact Factor (JIF), whether the journal is open access and if so to what degree, and of course, the peer authors and studies present within each publication. Consider your personal goals and the objective of the research when deciding where to submit your completed, pitfall-free research study.

Scientific research is an exhaustive, complicated process. We hope that pointing out these pitfalls will help make your next study go more smoothly.
