
Is Journal Impact Factor Good?

The debate over Journal Impact Factor (IF) rages on, with no end in sight. Most scientists and experts agree that it is a flawed measurement of how good academic journals are. Yet it may be the best measure out there, and it continues to be broadly used across research and academia.

At the same time, the emergence of preprints, the rise of open access, and the dominance of social media mean that the research landscape has diverged further than ever from what IF measures. IF is perhaps less informative than it has ever been, although it remains a useful tool when used with caution and care.

To start, what exactly is IF?

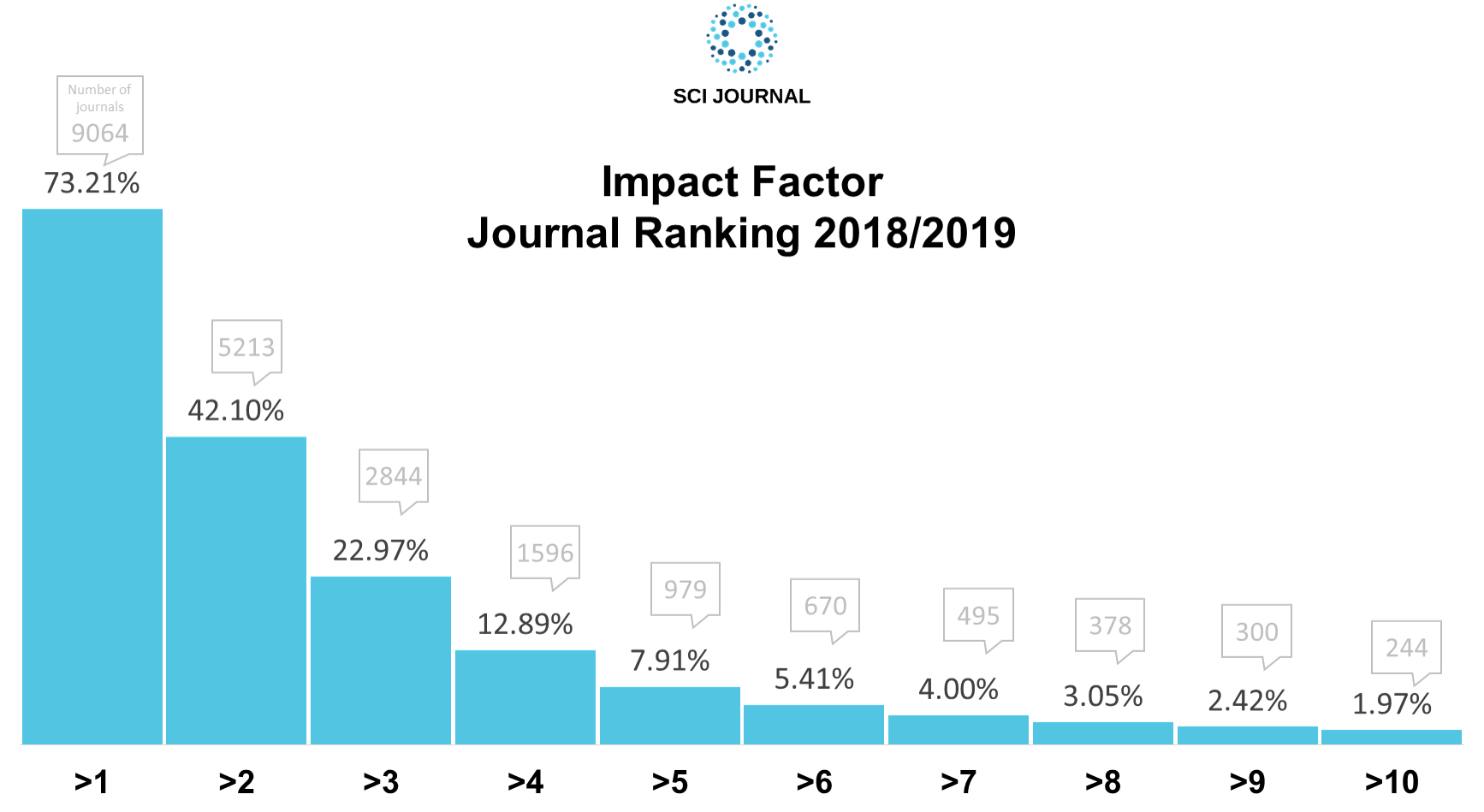

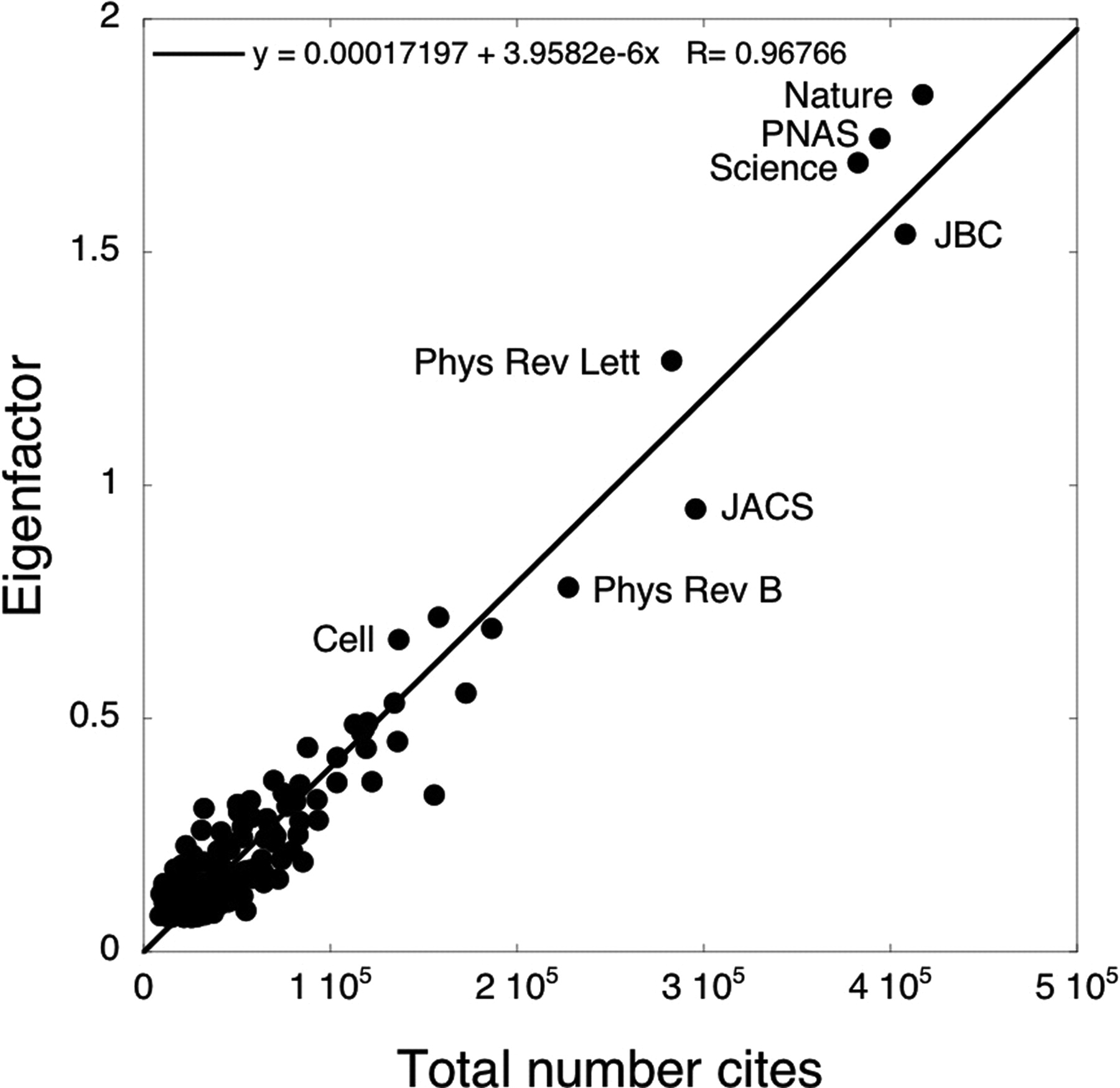

IF is a calculation of how frequently the average article in a journal is cited. It was devised by Eugene Garfield at the Institute for Scientific Information to help librarians identify journals to add to library holdings; the metric later passed to Thomson Reuters and is now run by Clarivate. Other common metrics include CiteScore, Eigenfactor, and h-index, each of which measures slightly different factors.

IF essentially measures the average citation rate of articles in a journal, which is often read as a proxy for average quality: journals where many of the articles are well cited will perform better in IF. If a journal has many articles but only a few perform well, it will do worse.

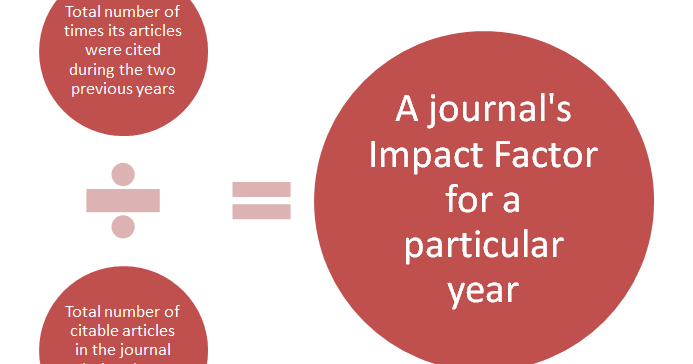

To be specific, here’s how the calculation works:

A = the number of times articles published in the previous two years were cited by indexed journals this year.

B = the total number of "citable items" published in the previous two years.

A/B = This year’s impact factor
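The calculation above can be sketched in a few lines of Python. This is only an illustration of the A/B ratio, not Clarivate's actual procedure; the function name `impact_factor` and the example counts are hypothetical.

```python
def impact_factor(citations_this_year: int, citable_items: int) -> float:
    """Return A / B for one journal.

    citations_this_year (A): citations received this year, from indexed
        journals, to items the journal published in the previous two years.
    citable_items (B): number of citable items the journal published in
        those same two years.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_this_year / citable_items


# Hypothetical journal: 200 citable items in the previous two years,
# cited 500 times this year.
print(impact_factor(500, 200))  # 2.5
```

Because the result is a simple mean, a handful of very highly cited papers can raise it just as much as many moderately cited ones.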

Impact factor is useful, especially when compared to other calculations of journal quality, because it eliminates certain biases, such as those in measures that favor larger journals, newer journals, or more frequently issued journals. However, the measure has been shown to be highly variable from year to year. More importantly, scientists argue that a judgment of a journal’s quality should never be extended to the individual scientists who publish in it.

And yet that is often exactly how IF is used in academia—as a measurement of a scientist’s accomplishments and qualifications. Aspiring professors and those in academia are frequently told that getting research published in high-impact journals is essential to career advancement, or even that publishing in low-tier journals can make them look bad.

A recent survey found that almost half of research-intensive universities consider IF when deciding who to promote. In China, many universities even pay impact-factor-related bonuses.

The usefulness of IF, in some ways, becomes its downfall. Because it can be used to rank and score the outputs of scientists and journals, it is tempting to do so, even though doing great science does not necessarily coincide with a journal’s ratio of citations over the last two years. Studies show that highly original research is more likely to be cited after three or more years, that is, after the two-year window that IF counts has closed.
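The effect of the two-year window can be made concrete with a small sketch. It assumes the standard definition described earlier (citations in a given year to items published in the two preceding years); the helper `counts_toward_if` and the citation years are invented for illustration.

```python
def counts_toward_if(published_year: int, cited_year: int) -> bool:
    """True if a citation made in cited_year falls inside the two-year
    window used by the impact factor for that year."""
    return 1 <= cited_year - published_year <= 2


# Hypothetical paper published in 2015 that is mostly cited later on.
citation_years = [2016, 2017, 2018, 2019, 2019, 2020]
counted = [year for year in citation_years if counts_toward_if(2015, year)]

print(len(counted), "of", len(citation_years), "citations count toward IF")
# 2 of 6 citations count toward IF
```

In this made-up case, two thirds of the paper's citations never contribute to any year's impact factor for the journal that published it.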

Even worse, IF can be manipulated by editorial policy: publications can deliberately increase journal self-citations, publish reviews rather than original articles, and publish many expert-based guidelines that are widely used and therefore widely cited.

A potential solution?

The San Francisco Declaration on Research Assessment (DORA) was conceived in December 2012 as a new way to evaluate scientific research. The goal, as stated by the chair of DORA’s steering group, Stephen Curry, is “a world in which the content of a research paper matters more than the impact factor of the journal in which it appears.”

Hundreds of universities so far have signed on to the agreement, which makes the following recommendations, among others:

- Eliminate the use of IF in funding, appointment, and promotion considerations

- Assess research on its own merits, rather than the journal it is published in

- Reduce the promotion and advertisement of IF scores

- Cite primary literature rather than reviews

And while DORA has made headway, garnering support from researchers around the world, IF remains stubbornly entrenched even in 2020. Scientists have argued for the need to recognize contribution to the scientific community in ways beyond citations, such as public engagement, internal committees, and teaching. Above all, institutions should be transparent about whether or not they are using IF to make decisions so that scientists know what they’re getting into.

So how should we use IF?

How about as what it is: a measure of citation frequencies. We should use IF as a measure of which journals have the most highly cited studies. And that’s it.

For librarians and for researchers in the literature-review stage, it’s a useful tool. But for evaluating an individual piece of research, and especially a scientist’s career, it is not.
